Tech’s sexist algorithms and how to fix them


Are whisks innately womanly? Do grills have girlish associations? A study has revealed how an artificial intelligence (AI) algorithm learnt to associate women with pictures of the kitchen, based on a set of photos where the people in the kitchen were more likely to be women. As it reviewed more than 100,000 labelled images from around the internet, its biased association became stronger than that shown by the data set — amplifying rather than simply replicating bias.

The work by the University of Virginia was one of several studies showing that machine-learning systems can easily pick up biases if their design and data sets are not carefully considered.
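The difference between replicating and amplifying bias can be made concrete with a small calculation. The sketch below is illustrative only: the counts are invented, the `female_share` helper is hypothetical, and the gap between two shares is just one simple way to express amplification, not necessarily the measure the researchers used.

```python
def female_share(records, activity):
    """Fraction of records for `activity` that are labelled 'woman'."""
    genders = [gender for act, gender in records if act == activity]
    return sum(gender == "woman" for gender in genders) / len(genders)


# Hypothetical toy data: (activity, gender) pairs, not figures from the study.
training_labels = [("cooking", "woman")] * 60 + [("cooking", "man")] * 40
model_predictions = [("cooking", "woman")] * 75 + [("cooking", "man")] * 25

train_share = female_share(training_labels, "cooking")
pred_share = female_share(model_predictions, "cooking")

# A positive gap means the model's output is more skewed than its training data.
amplification = pred_share - train_share

print(f"share of women in training labels:   {train_share:.0%}")
print(f"share of women in model predictions: {pred_share:.0%}")
print(f"amplification: {amplification:+.0%}")
```

In this invented case the data set is already skewed, but the model's predictions are more skewed still. A model that merely replicated the bias would show a gap of zero.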

In another study, researchers from Boston University and Microsoft trained an algorithm on Google News data and found that it carried through biases, labelling women as homemakers and men as software developers. Other experiments have examined the bias of translation software, which always describes doctors as men.

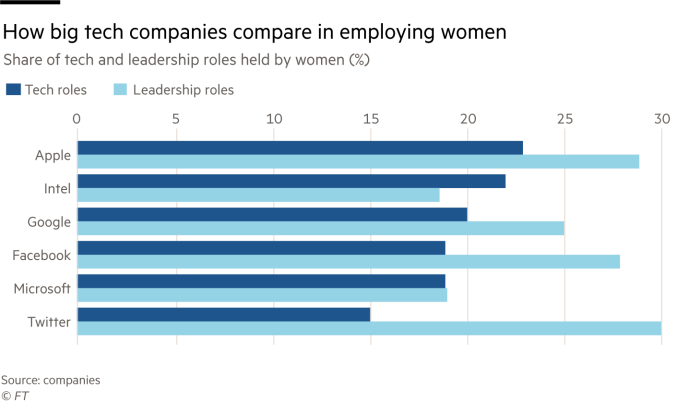

Given that algorithms are rapidly becoming responsible for more decisions about our lives, deployed by banks, healthcare companies and governments, built-in gender bias is a concern. The AI industry, however, employs an even lower proportion of women than the rest of the tech sector, and there are concerns that too few female voices are influencing machine learning.

Sara Wachter-Boettcher is the author of Technically Wrong, about how a white male technology industry has created products that neglect the needs of women and people of colour. She believes the push to increase diversity in technology should benefit not just tech employees but users, too.

“I think we don’t often talk about how it is bad for the technology itself, we talk about how it is bad for women’s careers,” Ms Wachter-Boettcher says. “Does it matter that the things that are profoundly changing and shaping our society are only being created by a small sliver of people with a small sliver of experiences?”

Technologists specialising in AI need to look very carefully at where their data sets come from and what biases exist, she argues. They should also examine failure rates — sometimes AI practitioners will be pleased with a low failure rate, but this is not good enough if it consistently fails the same group of people, Ms Wachter-Boettcher says.
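The point about failure rates can be shown with a short calculation. This is a minimal sketch with invented numbers: the groups "A" and "B", the counts and the `failure_rates` helper are all hypothetical, chosen only to show how a low overall failure rate can conceal a high rate for one group.

```python
from collections import defaultdict


def failure_rates(results):
    """Return (overall failure rate, failure rate per group).

    `results` is an iterable of (group, correct) pairs, where `correct`
    is True when the system handled that case properly.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for group, correct in results:
        totals[group] += 1
        if not correct:
            failures[group] += 1
    overall = sum(failures.values()) / sum(totals.values())
    by_group = {group: failures[group] / totals[group] for group in totals}
    return overall, by_group


# Hypothetical results: group A is 90% of cases, group B is 10%.
results = (
    [("A", True)] * 891 + [("A", False)] * 9
    + [("B", True)] * 69 + [("B", False)] * 31
)

overall, by_group = failure_rates(results)
print(f"overall failure rate: {overall:.1%}")
for group, rate in sorted(by_group.items()):
    print(f"group {group} failure rate: {rate:.1%}")
```

Here the system fails on 4 per cent of cases overall, which might look acceptable, yet it fails 1 per cent of group A and 31 per cent of group B. Reporting only the headline figure would hide that gap.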

“What is particularly dangerous is that we are moving all of this responsibility to a system and then just trusting the system will be unbiased,” she says, adding that it could be even “more dangerous” because it is hard to know why a machine has made a decision, and because it can get more and more biased over time.

Tess Posner is executive director of AI4ALL, a non-profit that aims to get more women and under-represented minorities interested in careers in AI. The organisation, started last year, runs summer camps for school students to learn more about AI at US universities.

Last summer’s students are teaching what they learnt to others, spreading the word about how to influence AI. One high-school student who had been through the summer programme won best paper at a conference on neural information-processing systems, where all of the other entrants were adults.

“One of the things that is most effective at engaging girls and under-represented populations is how this technology is going to solve problems in our world and in our community, rather than as a purely abstract math problem,” Ms Posner says.

“Some examples are using robotics and self-driving cars to assist elderly populations. Another one is making hospitals safe by using computer vision and natural language processing — all AI applications — to identify where to send aid after a natural disaster.”

The speed at which AI is progressing, however, means that it cannot wait for a new generation to correct potential biases.

Emma Byrne is head of advanced and AI-informed data analytics at 10x Banking, a fintech start-up in London. She believes it is important to have women in the room to point out problems with products that might not be as easy to spot for a white man who has not felt the same “visceral” impact of discrimination every day. Some men in AI still believe in a vision of technology as “pure” and “neutral”, she says.

However, it should not always be the duty of under-represented groups to push for less bias in AI, she says.

“One of the things that worries me about entering this career path for younger women and people of colour is I don’t want us to have to spend 20 per cent of our mental effort being the conscience or the common sense of our organisation,” she says.

Instead of leaving it to women to push their employers for bias-free and ethical AI, she thinks there may have to be some kind of legal framework for the technology.

“It is costly to hunt out and fix that bias. If you can rush to market, it is very tempting. You can’t rely on every organisation having these strong values to be sure that bias is eliminated in their product,” she says.
